Welcome back to Teatime! This is a weekly feature in which we sip tea and discuss some topic related to quality. Feel free to bring your tea and join in with questions in the comments section.

Tea of the week: Today I’m living it up with Teavana’s Monkey-picked Oolong tea. A lot of people have complained that it’s not a very good Oolong, but I’m a black tea drinker most of the time, and I found it quite delightful. Your mileage may vary, of course!

Today’s Topic: Measuring Maintainability

Last week we touched on maintainable code, and one design pattern you could use to make your code more maintainable. Today, I wanted to pull back a bit and talk about how to measure the maintainability of your code. What can you use to objectively determine how maintainable your code is and measure improvement over time?

Maintainability

First of all, what is maintainability? The ISO 9126 standard describes five key components:

- Modularity

- Reusability

- Analyzability

- Modifiability

- Testability

(I know you all like the last one ;)). Modular code is known to be a lot easier to maintain than blobs of spaghetti; that’s why procedures, objects, and modules were invented in the first place instead of keeping all code in assembly with gotos. Reusable code is easier to maintain because you can change the code in one place and it’s updated everywhere. Code that is easy to analyze is easier to maintain, because the learning curve is lessened. Code that cannot be modified obviously cannot be maintained at all. And finally, code that is easy to test is easier to maintain because you can test if your changes broke anything (using regression tests, typically unit tests in this case).

These things are hard to measure objectively, though; it’s much easier to give a gut feel guided by these measures than a hard number.

Complexity

Complexity is a key metric to measure when talking about maintainability. Now, before we dive in, I want to touch on the difference between code that is complex and code that is complicated. Complicated code is difficult to understand, but with enough time and effort, can be known. Complexity, on the other hand, is a measure of the number of interactions between entities. As the number of entities grows, the potential interactions between them grows literally exponentially, and at some point, the software becomes too complex for your brain to physically keep in working memory. At that point, nobody really “knows” the software, and it’s difficult to maintain.

An example of 0-complexity code:

print('hello world')

This is Conway’s Game of Life written in a language called APL:

⍎'⎕',∈N⍴⊂S←'←⎕←(3=T)∨M∧2=T←⊃+/(V⌽"⊂M),(V⊖"⊂M),(V,⌽V)⌽"(V,V ←1¯1)⊖"⊂M'

So there’s your boundaries when talking about complexity 🙂

Halstead Complexity

In 1977, Maurice Howard Halstead developed a measure of complexity for C programs in an attempt to quantify software development. He wanted to identify measurable aspects of code quality and calculate the relationships between them. His measure goes something like this:

- Define N1 as the total number of operators in the software. This includes things like arithmatic, equality, assignmant, logical operators, control words, function calls, array definitions, et cetera.

- Define N2 as the total number of operands in the software. This includes identifiers, variables, literals, labels, function names, et cetera. ‘1 + 2’ has one operator and two operands, and ‘1 + 1 + 1’ has three operands and two operators.

- Define n1 as the number of distinct operators in the software; basically, N1 with duplicates removed.

- Define n2 as the number of distinct operands in the software; basically, N2 with duplicates removed.

- The Vocabulary (n) is defined as n1 + n2

- The Length (N) is defined as N1 + N2

- The Volume (V) is defined as N * log2n

- The Difficulty (D) is defined as (n1/2) * (N2/n2)

- The Effort (E) is defined as V * D

- The Time required to write the software is calculated as E/18 seconds

- The number of bugs expected is V/3000.

This seems like magic, and it is, a little bit: it’s not super accurate, but it’s a reasonable starting place. Personally I love the part where you can calculate the time it took you to write the code only after you’ve written it 🙂

Cyclomatic Complexity

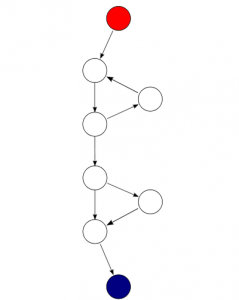

Thankfully, that’s not the only measure we have anymore. A much more popular measure is that of Cyclomatic Complexity, developed by Thomas McCabe Sr in 1976. Cyclomatic Complexity is defined as the number of linearly independent paths through a piece of code. To calculate it, you construct a directed control flow graph, like so:

Then you can calculate the complexity as follows:

- Define E as the number of edges in the graph (lines)

- Define N as the number of nodes in the graph (circles)

- Define P as the number of connected components (a complete standalone segment of the graph which is not connected to any other subgraph; this graph has one component)

- The complexity is computed as E – N + 2P

However, a much more practical method has arisen that is a simplification of the above:

- Start with a complexity of 1

- Add one for every “if”, “else”, “case” or other branch (such as “catch” or “then”)

- Add one for each loop (“while”, “do”, “for”)

- Add one for each complex condition (“and”, “or”)

- The final sum is your complexity.

Automated complexity measuring tools typically use that method of calculation. A function with a Cyclomatic Complexity under 10 is a simple program, 20-50 is complex, and over 50 is very high risk and difficult to test thoroughly.

Bus Factor

Another great measure I like to talk about when talking about maintainability is the Bus Factor[1] of the code. The Bus Factor is defined as the number of developers who would have to be hit by a bus before the project can no longer be maintained and is abandoned. This risk can be mitigated by thorough documentation, but honestly, how many of us document a project super thoroughly? Usually we assume we can ask questions of the senior devs who have their fingers in all the pies. The Bus Factor tells us how many (or how few) devs really worked on a single application over the years.

You can calculate the Bus Factor as follows:

- Calculate the author of each file in the repository (based on the original developer and the number of changes since)

- Remove the author with the most files

- Repeat until 50% of the files no longer have an author. The number of authors removed is your Bus Factor

I created a fun tool you can run on your own repositories to determine the Bus Factor: https://github.com/yamikuronue/BusFactor

The folks at http://mtov.github.io/Truck-Factor/ created their own tool, which they didn’t share, but they found the following results:

- Rails scored a bus factor of 7

- Ruby scored a 4

- Android scored a 12

- Linux scored a 90

- Grunt scored a 1

Most systems I’ve scanned have scored a 1 or a 2, which is pretty scary to be honest. How about you? What do your systems score? Are there any other metrics you like to use?

[1]: Also known as the Lotto Factor at my work, defined as the number of people who would have to win the lottery and quit before the project is unmaintainable.